A few weeks ago, I needed to set up a public bucket to host some images for a business service. Using CloudFormation, I set up the bucket and ensured it was publicly available. I then uploaded a test image. Pointing my browser at the object URL, I was dismayed to receive an access denied error! After a few minutes of head scratching, we received a notification in our Slack system that a public bucket had been created. Not only that, but the public access had been removed! Yes, I had been tripped up by our own automated protection! How can you automate your S3 bucket security?

What’s wrong with public S3 buckets?

Nothing! If you need to allow general access to public assets, then a public bucket is a perfectly acceptable, possibly desirable, solution. All objects in a public bucket are by default accessible to the world. You get to decide whether that access is read-only or read and write. Most likely, you will want to make all objects readable but not writeable by the general public!
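To make "readable but not writeable" concrete, here is a minimal sketch of a bucket policy that allows anonymous reads only. The function name and the bucket name are made up for illustration; the policy grants `s3:GetObject` to everyone and nothing else.

```python
import json


def public_read_policy(bucket_name: str) -> dict:
    """Return a bucket policy that lets anyone read objects but not write them."""
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "PublicReadGetObject",
                "Effect": "Allow",
                "Principal": "*",
                # Read only: no s3:PutObject or s3:DeleteObject is granted.
                "Action": "s3:GetObject",
                "Resource": f"arn:aws:s3:::{bucket_name}/*",
            }
        ],
    }


# "example-public-assets" is a hypothetical bucket name.
print(json.dumps(public_read_policy("example-public-assets"), indent=2))
```

A policy like this only takes effect if the Block Public Access settings on the bucket (and the account) allow public policies.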

The problem comes when you make a bucket with sensitive content publicly available. There have been many high-profile cases where buckets have unintentionally been made public and sensitive data exposed. It’s not any inherent problem with S3 buckets. In fact, far from it. The AWS S3 console will show you if a bucket is publicly accessible or not. When you create a new bucket, the default configuration is to block public access.

In fact, if you never need to directly share the content of your buckets publicly, then you can apply the Block Public Access settings at the account level so that they apply to all buckets owned by the account. You could then expose the content through some other endpoint, such as CloudFront or an application, if required.
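As a rough sketch, the account-level block can be applied with the S3 Control API. The helper name below is hypothetical, and the client is passed in so the function can be tried without AWS credentials; in practice it would be `boto3.client("s3control")`.

```python
# All four Block Public Access settings switched on.
BLOCK_ALL_PUBLIC_ACCESS = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def block_public_access_for_account(s3control_client, account_id: str) -> None:
    """Apply Block Public Access to every bucket owned by the account.

    s3control_client is expected to behave like boto3.client("s3control").
    """
    s3control_client.put_public_access_block(
        AccountId=account_id,
        PublicAccessBlockConfiguration=BLOCK_ALL_PUBLIC_ACCESS,
    )
```

Be aware that this would also block any bucket you intend to be public, so it only suits accounts where nothing is shared directly from S3.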

Block my buckets, please!

How do you make sure your buckets are not publicly exposed without manually checking? Automation is your friend for S3 bucket security!

Fortunately, everything you need is built into the AWS ecosystem. You just need to join the dots to provide a solution. At RedBear, we use the following solution to protect our buckets and block access.

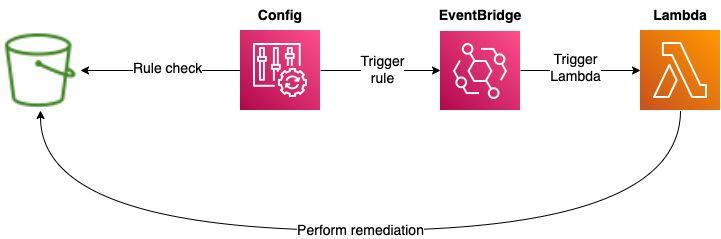

AWS Config rules are in place to check for public read or write access to a bucket. The rule check is triggered on any change to an S3 bucket, including new bucket creation. If a bucket is found to be publicly accessible, Config will generate an event that is picked up by an Amazon EventBridge rule. This rule will trigger an AWS Lambda function that will remediate the configuration of the target bucket by removing the public access.
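The flow above could be sketched roughly as follows. This is an illustration under assumptions, not necessarily how our solution is implemented: it assumes the EventBridge rule matches Config's "Config Rules Compliance Change" events, and it remediates by switching on the bucket's Block Public Access settings. The function names are made up for the example.

```python
from typing import Optional

# EventBridge pattern (assumed) for non-compliant S3 buckets reported by Config.
EVENT_PATTERN = {
    "source": ["aws.config"],
    "detail-type": ["Config Rules Compliance Change"],
    "detail": {
        "messageType": ["ComplianceChangeNotification"],
        "resourceType": ["AWS::S3::Bucket"],
        "newEvaluationResult": {"complianceType": ["NON_COMPLIANT"]},
    },
}

PUBLIC_ACCESS_BLOCK = {
    "BlockPublicAcls": True,
    "IgnorePublicAcls": True,
    "BlockPublicPolicy": True,
    "RestrictPublicBuckets": True,
}


def non_compliant_bucket(event: dict) -> Optional[str]:
    """Return the bucket name if the event reports a non-compliant S3 bucket."""
    detail = event.get("detail", {})
    if detail.get("resourceType") != "AWS::S3::Bucket":
        return None
    result = detail.get("newEvaluationResult", {})
    if result.get("complianceType") != "NON_COMPLIANT":
        return None
    # For S3 buckets, the Config resource ID is the bucket name.
    return detail.get("resourceId")


def remediate(event: dict, s3_client) -> Optional[str]:
    """Block public access on the offending bucket; return its name, or None."""
    bucket = non_compliant_bucket(event)
    if bucket is None:
        return None
    s3_client.put_public_access_block(
        Bucket=bucket,
        PublicAccessBlockConfiguration=PUBLIC_ACCESS_BLOCK,
    )
    return bucket


def lambda_handler(event, context):
    # Imported lazily so the helpers above can be exercised without AWS.
    import boto3

    return {"remediated": remediate(event, boto3.client("s3"))}
```

Keeping the event parsing separate from the AWS call makes it easy to test the decision logic with sample events before wiring it up to a real account.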

With this solution, we don’t need to manually check that a bucket has been publicly exposed as the automation will block that for us. We can all sleep soundly at night!

I need a public bucket but I like this automation

What if you do need to make specific buckets publicly available? How do you manage that safely without making it a management overhead?

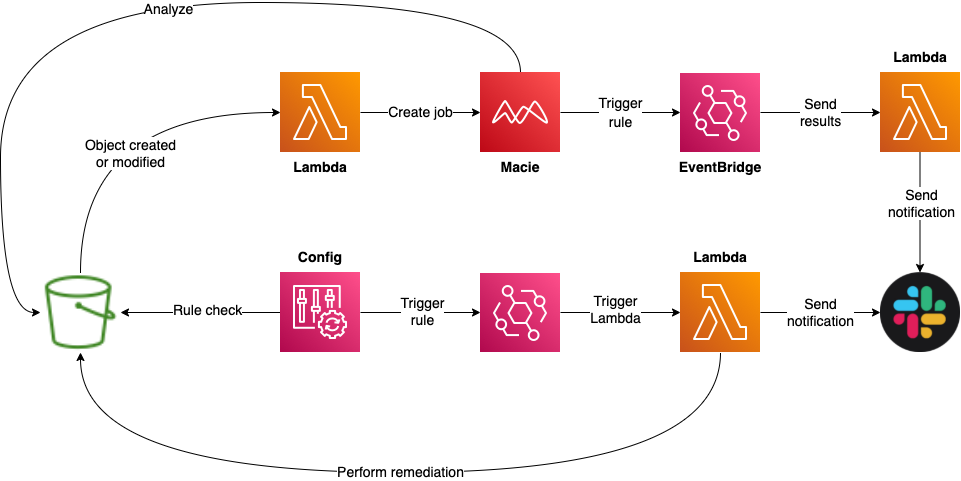

In the above solution, we also use tags to determine if a bucket is supposed to be public or not. In the case of a tagged bucket, the automated blocking is not applied. However, for these buckets, we want to make sure that only public information is shared via the bucket. We need to update our solution to enable this.
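The tag check might look something like the sketch below. The tag key and value are hypothetical (use whatever convention your organisation agrees on), and the input is the `TagSet` list returned by the S3 `get_bucket_tagging` call.

```python
# Hypothetical tag convention marking a bucket as intentionally public.
PUBLIC_TAG_KEY = "public-access"
PUBLIC_TAG_VALUE = "approved"


def is_approved_public(tag_set: list) -> bool:
    """Return True if the bucket's tags mark it as intentionally public.

    tag_set is the "TagSet" list from get_bucket_tagging, for example
    [{"Key": "public-access", "Value": "approved"}].
    """
    return any(
        tag.get("Key") == PUBLIC_TAG_KEY and tag.get("Value") == PUBLIC_TAG_VALUE
        for tag in tag_set
    )


def should_block(tag_set: list) -> bool:
    """Automated blocking applies only to buckets without the approval tag."""
    return not is_approved_public(tag_set)
```

Note that `get_bucket_tagging` raises an error for a bucket with no tags at all, so the caller should treat that case as an empty tag set.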

To provide this, we add a trigger to a public bucket so that whenever an object is created or changed, we send a notification to a Lambda function. This function will kick off an Amazon Macie job to scan the bucket (or you may already have Macie configured for public buckets!) and look for potentially sensitive information such as PII. We then notify the bucket owner of the change and the results.
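As an illustration, the Lambda function could turn the S3 notification into a one-time Macie classification job along these lines. The helper names and the job name prefix are assumptions for the example; the resulting dictionary is intended to be passed to the Macie `create_classification_job` call.

```python
import uuid


def buckets_in_s3_event(event: dict) -> list:
    """Return the distinct bucket names found in an S3 event notification."""
    return sorted({record["s3"]["bucket"]["name"] for record in event.get("Records", [])})


def macie_job_request(account_id: str, bucket_names: list) -> dict:
    """Build the arguments for a one-time Macie job covering the given buckets."""
    return {
        "clientToken": str(uuid.uuid4()),  # idempotency token
        "jobType": "ONE_TIME",
        # Hypothetical naming scheme with a random suffix.
        "name": f"public-bucket-scan-{uuid.uuid4().hex[:8]}",
        "s3JobDefinition": {
            "bucketDefinitions": [
                {"accountId": account_id, "buckets": list(bucket_names)}
            ]
        },
    }


# Usage inside the Lambda function (requires boto3 and Macie to be enabled):
#   macie = boto3.client("macie2")
#   macie.create_classification_job(**macie_job_request(account_id, buckets))
```

Scanning the whole bucket on every upload may be more than you need, so it is worth considering how often jobs should run for a busy bucket.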

At this point, we leave the response to the bucket owner to decide what to do. Of course, we can’t really fully automate this part as the machinery doesn’t know what should or should not be there. Sometimes you need a human eye!

It may seem obvious, but you should adopt a least-privilege approach and set up your policies so that only people who should be able to modify or upload objects to a public bucket have the access to do so!

We now have an automated S3 Bucket security solution.

Of course, nothing is ever perfect or finished with security. For this kind of solution to work, you need to make sure that you only create public assets when absolutely needed. You also need to tightly control who can create and tag buckets as public. Once more, access policy and a least-privilege approach are critical.

Is there anything else to consider for S3 bucket security?

In general, we would recommend that all buckets are encrypted with AWS KMS keys. We always use customer managed KMS keys as they provide the ability to control the key policy (i.e. who can access or use a key!). Of course, for public buckets, this is not especially useful as the objects will be available to end users anyway!
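For reference, default encryption with a customer managed key can be expressed as in this sketch. The function name is made up, and the key ARN would be one of your own keys; the returned dictionary is intended for the `ServerSideEncryptionConfiguration` argument of the S3 `put_bucket_encryption` call.

```python
def kms_encryption_config(kms_key_arn: str) -> dict:
    """Build a default-encryption configuration that uses a customer managed key."""
    return {
        "Rules": [
            {
                "ApplyServerSideEncryptionByDefault": {
                    "SSEAlgorithm": "aws:kms",
                    "KMSMasterKeyID": kms_key_arn,
                },
                # S3 Bucket Keys reduce the number of requests made to KMS.
                "BucketKeyEnabled": True,
            }
        ]
    }


# Usage (requires boto3 and a real bucket and key):
#   s3.put_bucket_encryption(
#       Bucket=bucket_name,
#       ServerSideEncryptionConfiguration=kms_encryption_config(key_arn),
#   )
```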

However, you should consider adding the following options.

- Enable object versioning so that you can roll back to a previous version should something happen to an object;

- Enable access logging of all requests to the bucket;

- Enforce access via HTTPS only via bucket policy.
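The three options above can be sketched as follows. This is one possible approach with hypothetical helper names: the HTTPS rule is added as a deny statement on the `aws:SecureTransport` condition key, and it is merged into the existing bucket policy because `put_bucket_policy` replaces the whole policy, which would otherwise wipe out a public read statement.

```python
import copy
import json

HTTPS_ONLY_SID = "DenyInsecureTransport"


def with_https_only(policy, bucket_name: str) -> dict:
    """Return a copy of the policy with a statement denying non-HTTPS requests."""
    updated = copy.deepcopy(policy) if policy else {}
    updated.setdefault("Version", "2012-10-17")
    statements = updated.get("Statement", [])
    if isinstance(statements, dict):  # a policy may hold a single statement
        statements = [statements]
    # Drop any earlier copy of our statement so the function is repeatable.
    statements = [s for s in statements if s.get("Sid") != HTTPS_ONLY_SID]
    statements.append({
        "Sid": HTTPS_ONLY_SID,
        "Effect": "Deny",
        "Principal": "*",
        "Action": "s3:*",
        "Resource": [f"arn:aws:s3:::{bucket_name}", f"arn:aws:s3:::{bucket_name}/*"],
        "Condition": {"Bool": {"aws:SecureTransport": "false"}},
    })
    updated["Statement"] = statements
    return updated


def apply_hardening(s3_client, bucket_name: str, log_bucket: str, existing_policy=None) -> None:
    """Enable versioning, access logging and HTTPS-only access on a bucket.

    s3_client is expected to behave like boto3.client("s3").
    """
    s3_client.put_bucket_versioning(
        Bucket=bucket_name,
        VersioningConfiguration={"Status": "Enabled"},
    )
    s3_client.put_bucket_logging(
        Bucket=bucket_name,
        BucketLoggingStatus={
            "LoggingEnabled": {"TargetBucket": log_bucket, "TargetPrefix": f"{bucket_name}/"}
        },
    )
    s3_client.put_bucket_policy(
        Bucket=bucket_name,
        Policy=json.dumps(with_https_only(existing_policy, bucket_name)),
    )
```

The log bucket needs to allow the S3 logging service to write to it, and it should of course not be public itself.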

Finally, don’t forget to back up your bucket. AWS Backup recently added support for backing up S3, or you can use object replication!

Wrapping up

Public buckets really are a very useful tool. However, you need to think about your S3 bucket security and make use of automation to manage it!

If you would like to understand more about this solution, please get in contact.