The Sydney Cloud Security Conference, powered by AWS, was held recently. The focus for the event was “Navigating the future with Security”. It’s a timely subject, with AI solutions seemingly everywhere you turn, and those solutions bring their own security challenges. RedBear was at the event as both attendee and sponsor. In between a heap of excellent conversations with current and future customers, I managed to catch some of the content and key themes.

AI isn’t a fad

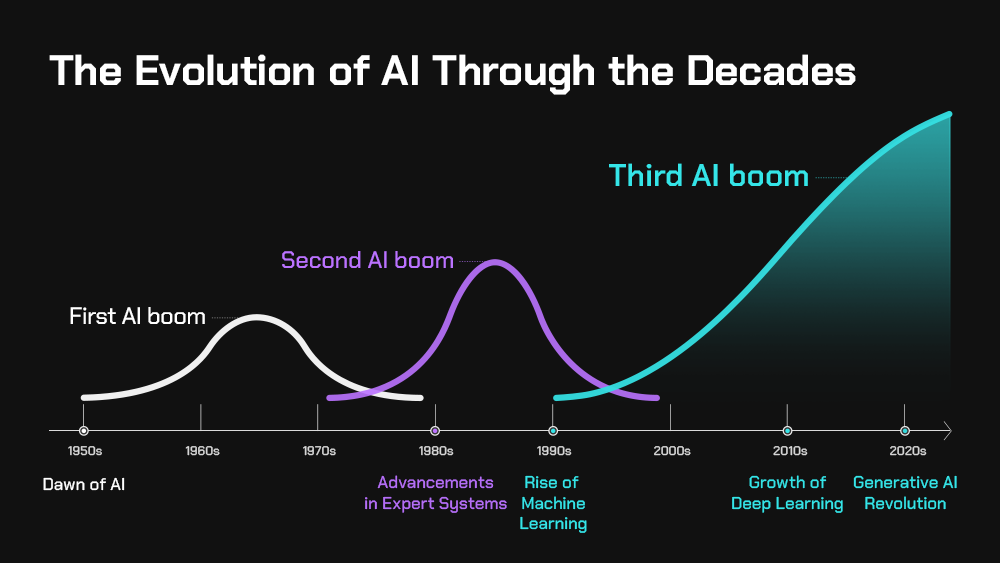

Yes, the hype may have faded a little as people wait for truly killer AI-based applications, but AI isn’t going away anytime soon. In fact, it’s rapidly evolving. If you had only been paying attention to the IT media for the last few years, you would think that AI was invented in 2022!

AI research actually started in the 1950s, long before computing platforms were capable of implementing the ideas that were forming at the time. When I was a teenager in the 80s, it seemed to be all about a computer being able to beat a chess master. In the last 10 years, we have seen the rise of machine learning and image recognition, which led to many fantastic use cases, such as automatic detection of sharks using drones and image recognition in medical scanning.

The last few years have seen a massive spike in the development of, and interest in, AI solutions, driven by more compute power than ever before. Couple that with the general availability of platforms such as Amazon Bedrock, which mean you no longer need to build and train your own models, and you can see why AI has taken such a rapid upward trajectory. We’ve rapidly gone from Generative AI (Gen AI), such as chatbots and assistants, to agentic AI and its ability to automate tasks autonomously.

Securing AI

For AI-powered web applications, the same basic security principles still apply. You still need to handle inputs and outputs safely and protect against SQL injection and cross-site scripting (XSS) attacks, for example. However, AI-powered applications also introduce new attack vectors that didn’t previously exist.

From a Gen AI perspective

- Prompt manipulation, where attackers try to trick the Gen AI app into revealing information it shouldn’t.

- Data access control – in a traditional app, the user role would determine what access they could have to data. With Gen AI, how do you provide the same level of control when the model is trained on the whole data set?

- Use guardrails to reduce false or inappropriate content in responses.
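To make the data access control point concrete: one common pattern for retrieval-based Gen AI apps is to filter retrieved content against the requesting user’s permissions *before* it reaches the model, so the model never sees data the user couldn’t open directly. The sketch below assumes a hypothetical `Document` type tagged with the groups allowed to read it; in a real system those permissions would come from your identity provider or the source system, not be hard-coded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical retrieved document, tagged with the groups allowed to read it."""
    doc_id: str
    text: str
    allowed_groups: frozenset


def authorised_context(docs, user_groups):
    """Keep only documents the user could already open directly."""
    return [d for d in docs if d.allowed_groups & set(user_groups)]


def build_prompt(question, docs, user_groups):
    """Assemble a prompt from authorised documents only; filtering happens before the model call."""
    context = "\n".join(d.text for d in authorised_context(docs, user_groups))
    return f"Answer using only this context:\n{context}\n\nQuestion: {question}"


# Hypothetical example data: one document for all staff, one restricted to HR.
docs = [
    Document("faq", "Office hours are 9 to 5.", frozenset({"staff"})),
    Document("payroll", "Salary bands for 2025.", frozenset({"hr"})),
]
print(build_prompt("When is the office open?", docs, {"staff"}))
```

Filtering before the model call, rather than instructing the model to withhold data, matters because a prompt-manipulation attack can talk a model out of an instruction, but it cannot make the model reveal text it never received.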

With Agentic AI

- The AI agent has access to multiple APIs. It makes decisions and performs actions. Gen AI is essentially read-only, generating content from a set of data that it can’t manipulate (kind of…). AI agents, by contrast, act on their decisions, which can mean accessing and changing data.

- AI agents connect to far more services (including third-party services) than a traditional web application. Protect the identity used by the AI agent by adopting a zero trust approach with temporary credentials.

- As with Gen AI, an AI agent should only use data the end user has access to when responding or acting on the user’s behalf. This is especially important for sensitive information.

- Think of an AI agent that books travel on your behalf. It will need access to your credit card, your calendar (and potentially your contacts), your credentials for booking sites, your frequent flyer account and so on. That’s a lot of trust.
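The temporary-credential and least-privilege points above can be sketched as a credential the agent carries for each user session: scoped to specific actions and expiring quickly. On AWS, temporary credentials from AWS STS fill this role; the `ScopedCredential` type, the action names and the 15-minute lifetime below are hypothetical, chosen only to illustrate the checks an agent’s tool layer should make before every call.

```python
import time
from dataclasses import dataclass


@dataclass(frozen=True)
class ScopedCredential:
    """Hypothetical short-lived credential: who the agent acts for, what it may do, when it expires."""
    user_id: str
    allowed_actions: frozenset
    expires_at: float


def issue_credential(user_id, actions, ttl_seconds=900, now=None):
    """Issue a credential for only the actions this task needs (default lifetime: 15 minutes)."""
    now = time.time() if now is None else now
    return ScopedCredential(user_id, frozenset(actions), now + ttl_seconds)


def authorise(cred, action, now=None):
    """Check every tool call: reject expired credentials and any action not explicitly granted."""
    now = time.time() if now is None else now
    if now >= cred.expires_at:
        raise PermissionError("credential expired")
    if action not in cred.allowed_actions:
        raise PermissionError(f"action {action!r} not granted to this session")
    return True


# A hypothetical travel-booking session may search flights and read the calendar,
# but it was never granted permission to charge a card.
cred = issue_credential("alice", {"flights:search", "calendar:read"})
authorise(cred, "flights:search")
try:
    authorise(cred, "payments:charge")
except PermissionError as err:
    print("blocked:", err)
```

Checking on every call, rather than once at session start, means an expired or over-reaching request fails at the point of action, which is where an agent’s mistakes would do damage.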

From a penetration testing perspective, it’s almost impossible to test every combination of input and output for AI-powered services. Unlike traditional applications, AI is non-deterministic: a given input won’t necessarily produce the same output every time. This makes test coverage really difficult to determine!
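Exhaustive coverage isn’t achievable, but one partial mitigation is to stop asserting exact outputs and instead sample the same prompt many times, checking properties that must hold for *every* response. In the sketch below, `stub_model` is a hypothetical stand-in for a real model call (it simply varies its wording at random), and the card-number regex is a deliberately crude example of an invariant.

```python
import random
import re

# Crude invariant: 13 to 19 consecutive digits looks like a payment card number.
CARD_PATTERN = re.compile(r"\b\d{13,19}\b")


def stub_model(prompt, rng):
    """Hypothetical stand-in for a model call: same meaning, different wording each time."""
    opener = rng.choice(["Sure.", "Happy to help.", "Here you go."])
    return f"{opener} Your booking reference is ABC123."


def check_invariants(output):
    """Return a list of violated properties; an empty list means the response passed."""
    problems = []
    if CARD_PATTERN.search(output):
        problems.append("possible card number in output")
    if "ABC123" not in output:
        problems.append("booking reference missing")
    return problems


def sample_test(prompt, runs=50, seed=0):
    """Run one prompt many times and collect every invariant violation seen."""
    rng = random.Random(seed)  # seeded so the stub's variation is reproducible
    failures = []
    for _ in range(runs):
        failures.extend(check_invariants(stub_model(prompt, rng)))
    return failures


print(sample_test("Book me a flight to Melbourne"))
```

Passing 50 samples is evidence, not proof: this narrows the coverage gap described above rather than closing it.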

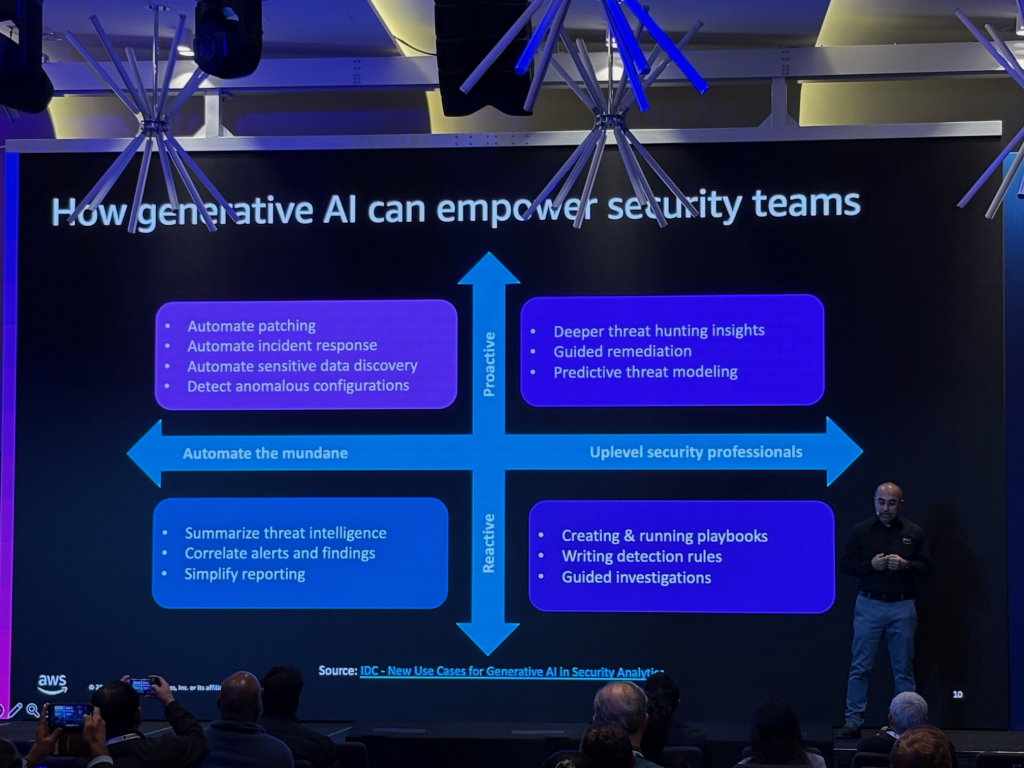

Of course, cybersecurity for AI applications is not only about how we can ensure we don’t introduce more risk. We can also use AI to bolster our security teams, reduce time to remediate and even to make penetration testing more effective.

Security Incident Response

As the great poet of our age Taylor Swift said “If you fail to plan, you plan to fail”.

The AWS Customer Incident Response Team (CIRT) spoke about the need to be prepared for a cybersecurity incident. Preparation means:

- Having a plan or procedure to follow in the event of an incident.

- Making sure the right people are part of the incident response process, including non-technical (legal, PR etc.) and third parties. Technical resources should have pre-provisioned access to relevant systems and data.

- Ensuring that the right data is being captured for investigation and forensics. Importantly, this data needs to be protected from deletion or modification by a malicious actor.

- Finally, test your response plan through game days to evaluate the readiness of your team, tools and processes. Learn, refine and iterate.
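On the point about protecting investigation data from tampering: on AWS, managed controls such as S3 Object Lock or CloudTrail log file integrity validation are the practical answer, but the underlying idea is easy to sketch. In this hypothetical hash chain, each log entry records the hash of the one before it, so editing or removing an earlier entry breaks verification. Note the limit: an attacker could still truncate the newest entries, or rebuild the whole chain, unless the latest hash is also stored somewhere they can’t reach.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash, event):
    """Hash the previous entry's hash together with a canonical JSON form of this event."""
    payload = json.dumps(event, sort_keys=True)
    return hashlib.sha256((prev_hash + payload).encode()).hexdigest()


def append_entry(chain, event):
    """Append an event, linking it to the hash of the entry before it."""
    prev = chain[-1]["hash"] if chain else GENESIS
    chain.append({"event": event, "prev": prev, "hash": _digest(prev, event)})
    return chain


def verify(chain):
    """Recompute every link; any edited or removed earlier entry makes this return False."""
    prev = GENESIS
    for entry in chain:
        if entry["prev"] != prev or entry["hash"] != _digest(prev, entry["event"]):
            return False
        prev = entry["hash"]
    return True


log = []
append_entry(log, {"user": "admin", "action": "login"})
append_entry(log, {"user": "admin", "action": "delete_bucket"})
print(verify(log))
```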

Culture of Security

You can have all the tools in the world, but if your teams are not embedding security into their daily practices, it’s always going to be an uphill battle. The traditional model of “the security team” doesn’t work in a fast-paced 2025. Training your developers and engineers in security practices means your security team becomes the subject matter expert (SME), not the roadblock. “Shift left” is a term that has been used ad nauseam. Every developer-focused security tool has tossed the term around, yet we still see low adoption of this well-intended approach. Baking security into our thinking is the only way we can achieve the cybersecurity outcomes we need to secure our services. The days when a “pen test two weeks before go-live” was acceptable are long gone.

- Train your teams on cybersecurity practices.

- Give them the tools, but integrate those tools into existing practices so they become an enabler, not a blocker.

- Use your security teams as SMEs all the way through the development and deployment cycle.

- Yes, security really is everyone’s job. There will never be enough cybersecurity experts, so embed their knowledge as guardrails in your practices.

Conclusion

It was an inspiring day. Navigating the future indeed.

If you want to learn more about the day, cybersecurity in the cloud or what AI means for your risk posture, contact us at RedBear.